Tutorial part 4: Adding JIT-compilation to a toy interpreter#

In this example we construct a “toy” interpreter, and add JIT-compilation to it.

Our toy interpreter#

It’s a stack-based interpreter, and is intended as a (very simple) example of the kind of bytecode interpreter seen in dynamic languages such as Python, Ruby etc.

For the sake of simplicity, our toy virtual machine is very limited:

The only data type is int

It can only work on one function at a time (so that the only function call that can be made is to recurse).

Functions can only take one parameter.

Functions have a stack of int values.

We’ll implement function call within the interpreter by calling a function in our implementation, rather than implementing our own frame stack.

The parser is only good enough to get the examples to work.

Naturally, a real interpreter would be much more complicated that this.

The following operations are supported:

Operation |

Meaning |

Old Stack |

New Stack |

|---|---|---|---|

DUP |

Duplicate top of stack. |

|

|

ROT |

Swap top two elements of stack. |

|

|

BINARY_ADD |

Add the top two elements on the stack. |

|

|

BINARY_SUBTRACT |

Likewise, but subtract. |

|

|

BINARY_MULT |

Likewise, but multiply. |

|

|

BINARY_COMPARE_LT |

Compare the top two elements on the stack and push a nonzero/zero if (x<y). |

|

|

RECURSE |

Recurse, passing the top of the stack, and popping the result. |

|

|

RETURN |

Return the top of the stack. |

|

|

PUSH_CONST arg |

Push an int const. |

|

|

JUMP_ABS_IF_TRUE arg |

Pop; if top of stack was nonzero, jump to |

|

|

Programs can be interpreted, disassembled, and compiled to machine code.

The interpreter reads .toy scripts. Here’s what a simple recursive

factorial program looks like, the script factorial.toy.

The parser ignores lines beginning with a #.

# Simple recursive factorial implementation, roughly equivalent to: # # int factorial (int arg) # { # if (arg < 2) # return arg # return arg * factorial (arg - 1) # } # Initial state: # stack: [arg] # 0: DUP # stack: [arg, arg] # 1: PUSH_CONST 2 # stack: [arg, arg, 2] # 2: BINARY_COMPARE_LT # stack: [arg, (arg < 2)] # 3: JUMP_ABS_IF_TRUE 9 # stack: [arg] # 4: DUP # stack: [arg, arg] # 5: PUSH_CONST 1 # stack: [arg, arg, 1] # 6: BINARY_SUBTRACT # stack: [arg, (arg - 1) # 7: RECURSE # stack: [arg, factorial(arg - 1)] # 8: BINARY_MULT # stack: [arg * factorial(arg - 1)] # 9: RETURN

The interpreter is a simple infinite loop with a big switch statement

based on what the next opcode is:

static int toyvm_function_interpret (toyvm_function *fn, int arg, FILE *trace) { toyvm_frame frame; #define PUSH(ARG) (toyvm_frame_push (&frame, (ARG))) #define POP(ARG) (toyvm_frame_pop (&frame)) frame.frm_function = fn; frame.frm_pc = 0; frame.frm_cur_depth = 0; PUSH (arg); while (1) { toyvm_op *op; int x, y; assert (frame.frm_pc < fn->fn_num_ops); op = &fn->fn_ops[frame.frm_pc++]; if (trace) { toyvm_frame_dump_stack (&frame, trace); toyvm_function_disassemble_op (fn, op, frame.frm_pc, trace); } switch (op->op_opcode) { /* Ops taking no operand. */ case DUP: x = POP (); PUSH (x); PUSH (x); break; case ROT: y = POP (); x = POP (); PUSH (y); PUSH (x); break; case BINARY_ADD: y = POP (); x = POP (); PUSH (x + y); break; case BINARY_SUBTRACT: y = POP (); x = POP (); PUSH (x - y); break; case BINARY_MULT: y = POP (); x = POP (); PUSH (x * y); break; case BINARY_COMPARE_LT: y = POP (); x = POP (); PUSH (x < y); break; case RECURSE: x = POP (); x = toyvm_function_interpret (fn, x, trace); PUSH (x); break; case RETURN: return POP (); /* Ops taking an operand. */ case PUSH_CONST: PUSH (op->op_operand); break; case JUMP_ABS_IF_TRUE: x = POP (); if (x) frame.frm_pc = op->op_operand; break; default: assert (0); /* unknown opcode */ } /* end of switch on opcode */ } /* end of while loop */ #undef PUSH #undef POP }

Compiling to machine code#

We want to generate machine code that can be cast to this type and then directly executed in-process:

typedef int (*toyvm_compiled_code) (int);

The lifetime of the code is tied to that of a gcc_jit_result*.

We’ll handle this by bundling them up in a structure, so that we can

clean them up together by calling gcc_jit_result_release():

struct toyvm_compiled_function { gcc_jit_result *cf_jit_result; toyvm_compiled_code cf_code; };

Our compiler isn’t very sophisticated; it takes the implementation of each opcode above, and maps it directly to the operations supported by the libgccjit API.

How should we handle the stack? In theory we could calculate what the

stack depth will be at each opcode, and optimize away the stack

manipulation “by hand”. We’ll see below that libgccjit is able to do

this for us, so we’ll implement stack manipulation

in a direct way, by creating a stack array and stack_depth

variables, local within the generated function, equivalent to this C code:

int stack_depth;

int stack[MAX_STACK_DEPTH];

We’ll also have local variables x and y for use when implementing

the opcodes, equivalent to this:

int x;

int y;

This means our compiler has the following state:

struct compilation_state { gcc_jit_context *ctxt; gcc_jit_type *int_type; gcc_jit_type *bool_type; gcc_jit_type *stack_type; /* int[MAX_STACK_DEPTH] */ gcc_jit_rvalue *const_one; gcc_jit_function *fn; gcc_jit_param *param_arg; gcc_jit_lvalue *stack; gcc_jit_lvalue *stack_depth; gcc_jit_lvalue *x; gcc_jit_lvalue *y; gcc_jit_location *op_locs[MAX_OPS]; gcc_jit_block *initial_block; gcc_jit_block *op_blocks[MAX_OPS]; };

Setting things up#

First we create our types:

state.int_type = gcc_jit_context_get_type (state.ctxt, GCC_JIT_TYPE_INT); state.bool_type = gcc_jit_context_get_type (state.ctxt, GCC_JIT_TYPE_BOOL); state.stack_type = gcc_jit_context_new_array_type (state.ctxt, NULL, state.int_type, MAX_STACK_DEPTH);

along with extracting a useful int constant:

state.const_one = gcc_jit_context_one (state.ctxt, state.int_type);

We’ll implement push and pop in terms of the stack array and

stack_depth. Here are helper functions for adding statements to

a block, implementing pushing and popping values:

static void add_push (compilation_state *state, gcc_jit_block *block, gcc_jit_rvalue *rvalue, gcc_jit_location *loc) { /* stack[stack_depth] = RVALUE */ gcc_jit_block_add_assignment ( block, loc, /* stack[stack_depth] */ gcc_jit_context_new_array_access ( state->ctxt, loc, gcc_jit_lvalue_as_rvalue (state->stack), gcc_jit_lvalue_as_rvalue (state->stack_depth)), rvalue); /* "stack_depth++;". */ gcc_jit_block_add_assignment_op ( block, loc, state->stack_depth, GCC_JIT_BINARY_OP_PLUS, state->const_one); } static void add_pop (compilation_state *state, gcc_jit_block *block, gcc_jit_lvalue *lvalue, gcc_jit_location *loc) { /* "--stack_depth;". */ gcc_jit_block_add_assignment_op ( block, loc, state->stack_depth, GCC_JIT_BINARY_OP_MINUS, state->const_one); /* "LVALUE = stack[stack_depth];". */ gcc_jit_block_add_assignment ( block, loc, lvalue, /* stack[stack_depth] */ gcc_jit_lvalue_as_rvalue ( gcc_jit_context_new_array_access ( state->ctxt, loc, gcc_jit_lvalue_as_rvalue (state->stack), gcc_jit_lvalue_as_rvalue (state->stack_depth)))); }

We will support single-stepping through the generated code in the

debugger, so we need to create gcc_jit_location instances, one

per operation in the source code. These will reference the lines of

e.g. factorial.toy.

for (pc = 0; pc < fn->fn_num_ops; pc++) { toyvm_op *op = &fn->fn_ops[pc]; state.op_locs[pc] = gcc_jit_context_new_location (state.ctxt, fn->fn_filename, op->op_linenum, 0); /* column */ }

Let’s create the function itself. As usual, we create its parameter first, then use the parameter to create the function:

state.param_arg = gcc_jit_context_new_param (state.ctxt, state.op_locs[0], state.int_type, "arg"); state.fn = gcc_jit_context_new_function (state.ctxt, state.op_locs[0], GCC_JIT_FUNCTION_EXPORTED, state.int_type, funcname, 1, &state.param_arg, 0);

We create the locals within the function.

state.stack = gcc_jit_function_new_local (state.fn, NULL, state.stack_type, "stack"); state.stack_depth = gcc_jit_function_new_local (state.fn, NULL, state.int_type, "stack_depth"); state.x = gcc_jit_function_new_local (state.fn, NULL, state.int_type, "x"); state.y = gcc_jit_function_new_local (state.fn, NULL, state.int_type, "y");

Populating the function#

There’s some one-time initialization, and the API treats the first block you create as the entrypoint of the function, so we need to create that block first:

state.initial_block = gcc_jit_function_new_block (state.fn, "initial");

We can now create blocks for each of the operations. Most of these will be consolidated into larger blocks when the optimizer runs.

for (pc = 0; pc < fn->fn_num_ops; pc++) { char buf[100]; sprintf (buf, "instr%i", pc); state.op_blocks[pc] = gcc_jit_function_new_block (state.fn, buf); }

Now that we have a block it can jump to when it’s done, we can populate the initial block:

/* "stack_depth = 0;". */ gcc_jit_block_add_assignment ( state.initial_block, state.op_locs[0], state.stack_depth, gcc_jit_context_zero (state.ctxt, state.int_type)); /* "PUSH (arg);". */ add_push (&state, state.initial_block, gcc_jit_param_as_rvalue (state.param_arg), state.op_locs[0]); /* ...and jump to insn 0. */ gcc_jit_block_end_with_jump (state.initial_block, state.op_locs[0], state.op_blocks[0]);

We can now populate the blocks for the individual operations. We loop through them, adding instructions to their blocks:

for (pc = 0; pc < fn->fn_num_ops; pc++) { gcc_jit_location *loc = state.op_locs[pc]; gcc_jit_block *block = state.op_blocks[pc]; gcc_jit_block *next_block = (pc < fn->fn_num_ops ? state.op_blocks[pc + 1] : NULL); toyvm_op *op; op = &fn->fn_ops[pc];

We’re going to have another big switch statement for implementing

the opcodes, this time for compiling them, rather than interpreting

them. It’s helpful to have macros for implementing push and pop, so that

we can make the switch statement that’s coming up look as much as

possible like the one above within the interpreter:

#define X_EQUALS_POP()\

add_pop (&state, block, state.x, loc)

#define Y_EQUALS_POP()\

add_pop (&state, block, state.y, loc)

#define PUSH_RVALUE(RVALUE)\

add_push (&state, block, (RVALUE), loc)

#define PUSH_X()\

PUSH_RVALUE (gcc_jit_lvalue_as_rvalue (state.x))

#define PUSH_Y() \

PUSH_RVALUE (gcc_jit_lvalue_as_rvalue (state.y))

Note

A particularly clever implementation would have an identical

switch statement shared by the interpreter and the compiler, with

some preprocessor “magic”. We’re not doing that here, for the sake

of simplicity.

When I first implemented this compiler, I accidentally missed an edit

when copying and pasting the Y_EQUALS_POP macro, so that popping the

stack into y instead erroneously assigned it to x, leaving y

uninitialized.

To track this kind of thing down, we can use

gcc_jit_block_add_comment() to add descriptive comments

to the internal representation. This is invaluable when looking through

the generated IR for, say factorial:

gcc_jit_block_add_comment (block, loc, opcode_names[op->op_opcode]);

We can now write the big switch statement that implements the

individual opcodes, populating the relevant block with statements:

switch (op->op_opcode) { case DUP: X_EQUALS_POP (); PUSH_X (); PUSH_X (); break; case ROT: Y_EQUALS_POP (); X_EQUALS_POP (); PUSH_Y (); PUSH_X (); break; case BINARY_ADD: Y_EQUALS_POP (); X_EQUALS_POP (); PUSH_RVALUE ( gcc_jit_context_new_binary_op ( state.ctxt, loc, GCC_JIT_BINARY_OP_PLUS, state.int_type, gcc_jit_lvalue_as_rvalue (state.x), gcc_jit_lvalue_as_rvalue (state.y))); break; case BINARY_SUBTRACT: Y_EQUALS_POP (); X_EQUALS_POP (); PUSH_RVALUE ( gcc_jit_context_new_binary_op ( state.ctxt, loc, GCC_JIT_BINARY_OP_MINUS, state.int_type, gcc_jit_lvalue_as_rvalue (state.x), gcc_jit_lvalue_as_rvalue (state.y))); break; case BINARY_MULT: Y_EQUALS_POP (); X_EQUALS_POP (); PUSH_RVALUE ( gcc_jit_context_new_binary_op ( state.ctxt, loc, GCC_JIT_BINARY_OP_MULT, state.int_type, gcc_jit_lvalue_as_rvalue (state.x), gcc_jit_lvalue_as_rvalue (state.y))); break; case BINARY_COMPARE_LT: Y_EQUALS_POP (); X_EQUALS_POP (); PUSH_RVALUE ( /* cast of bool to int */ gcc_jit_context_new_cast ( state.ctxt, loc, /* (x < y) as a bool */ gcc_jit_context_new_comparison ( state.ctxt, loc, GCC_JIT_COMPARISON_LT, gcc_jit_lvalue_as_rvalue (state.x), gcc_jit_lvalue_as_rvalue (state.y)), state.int_type)); break; case RECURSE: { X_EQUALS_POP (); gcc_jit_rvalue *arg = gcc_jit_lvalue_as_rvalue (state.x); PUSH_RVALUE ( gcc_jit_context_new_call ( state.ctxt, loc, state.fn, 1, &arg)); break; } case RETURN: X_EQUALS_POP (); gcc_jit_block_end_with_return ( block, loc, gcc_jit_lvalue_as_rvalue (state.x)); break; /* Ops taking an operand. */ case PUSH_CONST: PUSH_RVALUE ( gcc_jit_context_new_rvalue_from_int ( state.ctxt, state.int_type, op->op_operand)); break; case JUMP_ABS_IF_TRUE: X_EQUALS_POP (); gcc_jit_block_end_with_conditional ( block, loc, /* "(bool)x". */ gcc_jit_context_new_cast ( state.ctxt, loc, gcc_jit_lvalue_as_rvalue (state.x), state.bool_type), state.op_blocks[op->op_operand], /* on_true */ next_block); /* on_false */ break; default: assert(0); } /* end of switch on opcode */

Every block must be terminated, via a call to one of the

gcc_jit_block_end_with_ entrypoints. This has been done for two

of the opcodes, but we need to do it for the other ones, by jumping

to the next block.

if (op->op_opcode != JUMP_ABS_IF_TRUE && op->op_opcode != RETURN) gcc_jit_block_end_with_jump ( block, loc, next_block);

This is analogous to simply incrementing the program counter.

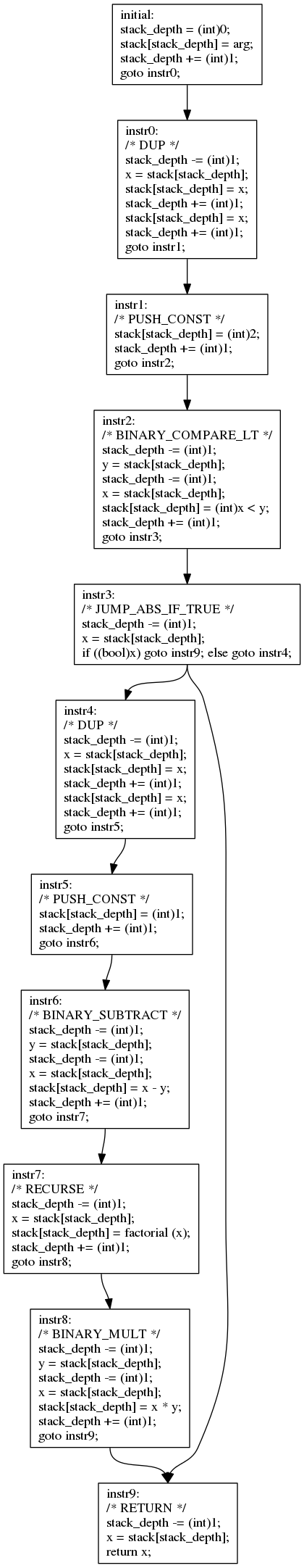

Verifying the control flow graph#

Having finished looping over the blocks, the context is complete.

As before, we can verify that the control flow and statements are sane by

using gcc_jit_function_dump_to_dot():

gcc_jit_function_dump_to_dot (state.fn, "/tmp/factorial.dot");

and viewing the result. Note how the label names, comments, and variable names show up in the dump, to make it easier to spot errors in our compiler.

Compiling the context#

Having finished looping over the blocks and populating them with statements, the context is complete.

We can now compile it, and extract machine code from the result:

gcc_jit_result *jit_result = gcc_jit_context_compile (state.ctxt); gcc_jit_context_release (state.ctxt); toyvm_compiled_function *toyvm_result = (toyvm_compiled_function *)calloc (1, sizeof (toyvm_compiled_function)); if (!toyvm_result) { fprintf (stderr, "out of memory allocating toyvm_compiled_function\n"); gcc_jit_result_release (jit_result); return NULL; } toyvm_result->cf_jit_result = jit_result; toyvm_result->cf_code = (toyvm_compiled_code)gcc_jit_result_get_code (jit_result, funcname);

We can now run the result:

toyvm_compiled_function *compiled_fn = toyvm_function_compile (fn); toyvm_compiled_code code = compiled_fn->cf_code; printf ("compiler result: %d\n", code (atoi (argv[2]))); gcc_jit_result_release (compiled_fn->cf_jit_result); free (compiled_fn);

Single-stepping through the generated code#

It’s possible to debug the generated code. To do this we need to both:

Set up source code locations for our statements, so that we can meaningfully step through the code. We did this above by calling

gcc_jit_context_new_location()and using the results.Enable the generation of debugging information, by setting

GCC_JIT_BOOL_OPTION_DEBUGINFOon thegcc_jit_contextviagcc_jit_context_set_bool_option():gcc_jit_context_set_bool_option ( ctxt, GCC_JIT_BOOL_OPTION_DEBUGINFO, 1);

Having done this, we can put a breakpoint on the generated function:

$ gdb --args ./toyvm factorial.toy 10

(gdb) break factorial

Function "factorial" not defined.

Make breakpoint pending on future shared library load? (y or [n]) y

Breakpoint 1 (factorial) pending.

(gdb) run

Breakpoint 1, factorial (arg=10) at factorial.toy:14

14 DUP

We’ve set up location information, which references factorial.toy.

This allows us to use e.g. list to see where we are in the script:

(gdb) list

9

10 # Initial state:

11 # stack: [arg]

12

13 # 0:

14 DUP

15 # stack: [arg, arg]

16

17 # 1:

18 PUSH_CONST 2

and to step through the function, examining the data:

(gdb) n

18 PUSH_CONST 2

(gdb) n

22 BINARY_COMPARE_LT

(gdb) print stack

$5 = {10, 10, 2, 0, -7152, 32767, 0, 0}

(gdb) print stack_depth

$6 = 3

You’ll see that the parts of the stack array that haven’t been

touched yet are uninitialized.

Note

Turning on optimizations may lead to unpredictable results when stepping through the generated code: the execution may appear to “jump around” the source code. This is analogous to turning up the optimization level in a regular compiler.

Examining the generated code#

How good is the optimized code?

We can turn up optimizations, by calling

gcc_jit_context_set_int_option() with

GCC_JIT_INT_OPTION_OPTIMIZATION_LEVEL:

gcc_jit_context_set_int_option (

ctxt,

GCC_JIT_INT_OPTION_OPTIMIZATION_LEVEL,

3);

One of GCC’s internal representations is called “gimple”. A dump of the initial gimple representation of the code can be seen by setting:

gcc_jit_context_set_bool_option (ctxt,

GCC_JIT_BOOL_OPTION_DUMP_INITIAL_GIMPLE,

1);

With optimization on and source locations displayed, this gives:

factorial (signed int arg)

{

<unnamed type> D.80;

signed int D.81;

signed int D.82;

signed int D.83;

signed int D.84;

signed int D.85;

signed int y;

signed int x;

signed int stack_depth;

signed int stack[8];

try

{

initial:

stack_depth = 0;

stack[stack_depth] = arg;

stack_depth = stack_depth + 1;

goto instr0;

instr0:

/* DUP */:

stack_depth = stack_depth + -1;

x = stack[stack_depth];

stack[stack_depth] = x;

stack_depth = stack_depth + 1;

stack[stack_depth] = x;

stack_depth = stack_depth + 1;

goto instr1;

instr1:

/* PUSH_CONST */:

stack[stack_depth] = 2;

stack_depth = stack_depth + 1;

goto instr2;

/* etc */

You can see the generated machine code in assembly form via:

gcc_jit_context_set_bool_option (

ctxt,

GCC_JIT_BOOL_OPTION_DUMP_GENERATED_CODE,

1);

result = gcc_jit_context_compile (ctxt);

which shows that (on this x86_64 box) the compiler has unrolled the loop and is using MMX instructions to perform several multiplications simultaneously:

.file "fake.c"

.text

.Ltext0:

.p2align 4,,15

.globl factorial

.type factorial, @function

factorial:

.LFB0:

.file 1 "factorial.toy"

.loc 1 14 0

.cfi_startproc

.LVL0:

.L2:

.loc 1 26 0

cmpl $1, %edi

jle .L13

leal -1(%rdi), %edx

movl %edx, %ecx

shrl $2, %ecx

leal 0(,%rcx,4), %esi

testl %esi, %esi

je .L14

cmpl $9, %edx

jbe .L14

leal -2(%rdi), %eax

movl %eax, -16(%rsp)

leal -3(%rdi), %eax

movd -16(%rsp), %xmm0

movl %edi, -16(%rsp)

movl %eax, -12(%rsp)

movd -16(%rsp), %xmm1

xorl %eax, %eax

movl %edx, -16(%rsp)

movd -12(%rsp), %xmm4

movd -16(%rsp), %xmm6

punpckldq %xmm4, %xmm0

movdqa .LC1(%rip), %xmm4

punpckldq %xmm6, %xmm1

punpcklqdq %xmm0, %xmm1

movdqa .LC0(%rip), %xmm0

jmp .L5

# etc - edited for brevity

This is clearly overkill for a function that will likely overflow the

int type before the vectorization is worthwhile - but then again, this

is a toy example.

Turning down the optimization level to 2:

gcc_jit_context_set_int_option (

ctxt,

GCC_JIT_INT_OPTION_OPTIMIZATION_LEVEL,

3);

yields this code, which is simple enough to quote in its entirety:

.file "fake.c"

.text

.p2align 4,,15

.globl factorial

.type factorial, @function

factorial:

.LFB0:

.cfi_startproc

.L2:

cmpl $1, %edi

jle .L8

movl $1, %edx

jmp .L4

.p2align 4,,10

.p2align 3

.L6:

movl %eax, %edi

.L4:

.L5:

leal -1(%rdi), %eax

imull %edi, %edx

cmpl $1, %eax

jne .L6

.L3:

.L7:

imull %edx, %eax

ret

.L8:

movl %edi, %eax

movl $1, %edx

jmp .L7

.cfi_endproc

.LFE0:

.size factorial, .-factorial

.ident "GCC: (GNU) 4.9.0 20131023 (Red Hat 0.2)"

.section .note.GNU-stack,"",@progbits

Note that the stack pushing and popping have been eliminated, as has the recursive call (in favor of an iteration).

Putting it all together#

The complete example can be seen in the source tree at

gcc/jit/docs/examples/tut04-toyvm/toyvm.c

along with a Makefile and a couple of sample .toy scripts:

$ ls -al

drwxrwxr-x. 2 david david 4096 Sep 19 17:46 .

drwxrwxr-x. 3 david david 4096 Sep 19 15:26 ..

-rw-rw-r--. 1 david david 615 Sep 19 12:43 factorial.toy

-rw-rw-r--. 1 david david 834 Sep 19 13:08 fibonacci.toy

-rw-rw-r--. 1 david david 238 Sep 19 14:22 Makefile

-rw-rw-r--. 1 david david 16457 Sep 19 17:07 toyvm.c

$ make toyvm

g++ -Wall -g -o toyvm toyvm.c -lgccjit

$ ./toyvm factorial.toy 10

interpreter result: 3628800

compiler result: 3628800

$ ./toyvm fibonacci.toy 10

interpreter result: 55

compiler result: 55

Behind the curtain: How does our code get optimized?#

Our example is done, but you may be wondering about exactly how the compiler turned what we gave it into the machine code seen above.

We can examine what the compiler is doing in detail by setting:

gcc_jit_context_set_bool_option (state.ctxt,

GCC_JIT_BOOL_OPTION_DUMP_EVERYTHING,

1);

gcc_jit_context_set_bool_option (state.ctxt,

GCC_JIT_BOOL_OPTION_KEEP_INTERMEDIATES,

1);

This will dump detailed information about the compiler’s state to a

directory under /tmp, and keep it from being cleaned up.

The precise names and their formats of these files is subject to change. Higher optimization levels lead to more files. Here’s what I saw (edited for brevity; there were almost 200 files):

intermediate files written to /tmp/libgccjit-KPQbGw

$ ls /tmp/libgccjit-KPQbGw/

fake.c.000i.cgraph

fake.c.000i.type-inheritance

fake.c.004t.gimple

fake.c.007t.omplower

fake.c.008t.lower

fake.c.011t.eh

fake.c.012t.cfg

fake.c.014i.visibility

fake.c.015i.early_local_cleanups

fake.c.016t.ssa

# etc

The gimple code is converted into Static Single Assignment form, with annotations for use when generating the debuginfo:

$ less /tmp/libgccjit-KPQbGw/fake.c.016t.ssa

;; Function factorial (factorial, funcdef_no=0, decl_uid=53, symbol_order=0)

factorial (signed int arg)

{

signed int stack[8];

signed int stack_depth;

signed int x;

signed int y;

<unnamed type> _20;

signed int _21;

signed int _38;

signed int _44;

signed int _51;

signed int _56;

initial:

stack_depth_3 = 0;

# DEBUG stack_depth => stack_depth_3

stack[stack_depth_3] = arg_5(D);

stack_depth_7 = stack_depth_3 + 1;

# DEBUG stack_depth => stack_depth_7

# DEBUG instr0 => NULL

# DEBUG /* DUP */ => NULL

stack_depth_8 = stack_depth_7 + -1;

# DEBUG stack_depth => stack_depth_8

x_9 = stack[stack_depth_8];

# DEBUG x => x_9

stack[stack_depth_8] = x_9;

stack_depth_11 = stack_depth_8 + 1;

# DEBUG stack_depth => stack_depth_11

stack[stack_depth_11] = x_9;

stack_depth_13 = stack_depth_11 + 1;

# DEBUG stack_depth => stack_depth_13

# DEBUG instr1 => NULL

# DEBUG /* PUSH_CONST */ => NULL

stack[stack_depth_13] = 2;

/* etc; edited for brevity */

We can perhaps better see the code by turning off

GCC_JIT_BOOL_OPTION_DEBUGINFO to suppress all those DEBUG

statements, giving:

$ less /tmp/libgccjit-1Hywc0/fake.c.016t.ssa

;; Function factorial (factorial, funcdef_no=0, decl_uid=53, symbol_order=0)

factorial (signed int arg)

{

signed int stack[8];

signed int stack_depth;

signed int x;

signed int y;

<unnamed type> _20;

signed int _21;

signed int _38;

signed int _44;

signed int _51;

signed int _56;

initial:

stack_depth_3 = 0;

stack[stack_depth_3] = arg_5(D);

stack_depth_7 = stack_depth_3 + 1;

stack_depth_8 = stack_depth_7 + -1;

x_9 = stack[stack_depth_8];

stack[stack_depth_8] = x_9;

stack_depth_11 = stack_depth_8 + 1;

stack[stack_depth_11] = x_9;

stack_depth_13 = stack_depth_11 + 1;

stack[stack_depth_13] = 2;

stack_depth_15 = stack_depth_13 + 1;

stack_depth_16 = stack_depth_15 + -1;

y_17 = stack[stack_depth_16];

stack_depth_18 = stack_depth_16 + -1;

x_19 = stack[stack_depth_18];

_20 = x_19 < y_17;

_21 = (signed int) _20;

stack[stack_depth_18] = _21;

stack_depth_23 = stack_depth_18 + 1;

stack_depth_24 = stack_depth_23 + -1;

x_25 = stack[stack_depth_24];

if (x_25 != 0)

goto <bb 4> (instr9);

else

goto <bb 3> (instr4);

instr4:

/* DUP */:

stack_depth_26 = stack_depth_24 + -1;

x_27 = stack[stack_depth_26];

stack[stack_depth_26] = x_27;

stack_depth_29 = stack_depth_26 + 1;

stack[stack_depth_29] = x_27;

stack_depth_31 = stack_depth_29 + 1;

stack[stack_depth_31] = 1;

stack_depth_33 = stack_depth_31 + 1;

stack_depth_34 = stack_depth_33 + -1;

y_35 = stack[stack_depth_34];

stack_depth_36 = stack_depth_34 + -1;

x_37 = stack[stack_depth_36];

_38 = x_37 - y_35;

stack[stack_depth_36] = _38;

stack_depth_40 = stack_depth_36 + 1;

stack_depth_41 = stack_depth_40 + -1;

x_42 = stack[stack_depth_41];

_44 = factorial (x_42);

stack[stack_depth_41] = _44;

stack_depth_46 = stack_depth_41 + 1;

stack_depth_47 = stack_depth_46 + -1;

y_48 = stack[stack_depth_47];

stack_depth_49 = stack_depth_47 + -1;

x_50 = stack[stack_depth_49];

_51 = x_50 * y_48;

stack[stack_depth_49] = _51;

stack_depth_53 = stack_depth_49 + 1;

# stack_depth_1 = PHI <stack_depth_24(2), stack_depth_53(3)>

instr9:

/* RETURN */:

stack_depth_54 = stack_depth_1 + -1;

x_55 = stack[stack_depth_54];

_56 = x_55;

stack ={v} {CLOBBER};

return _56;

}

Note in the above how all the gcc_jit_block instances we

created have been consolidated into just 3 blocks in GCC’s internal

representation: initial, instr4 and instr9.

Optimizing away stack manipulation#

Recall our simple implementation of stack operations. Let’s examine how the stack operations are optimized away.

After a pass of constant-propagation, the depth of the stack at each opcode can be determined at compile-time:

$ less /tmp/libgccjit-1Hywc0/fake.c.021t.ccp1

;; Function factorial (factorial, funcdef_no=0, decl_uid=53, symbol_order=0)

factorial (signed int arg)

{

signed int stack[8];

signed int stack_depth;

signed int x;

signed int y;

<unnamed type> _20;

signed int _21;

signed int _38;

signed int _44;

signed int _51;

initial:

stack[0] = arg_5(D);

x_9 = stack[0];

stack[0] = x_9;

stack[1] = x_9;

stack[2] = 2;

y_17 = stack[2];

x_19 = stack[1];

_20 = x_19 < y_17;

_21 = (signed int) _20;

stack[1] = _21;

x_25 = stack[1];

if (x_25 != 0)

goto <bb 4> (instr9);

else

goto <bb 3> (instr4);

instr4:

/* DUP */:

x_27 = stack[0];

stack[0] = x_27;

stack[1] = x_27;

stack[2] = 1;

y_35 = stack[2];

x_37 = stack[1];

_38 = x_37 - y_35;

stack[1] = _38;

x_42 = stack[1];

_44 = factorial (x_42);

stack[1] = _44;

y_48 = stack[1];

x_50 = stack[0];

_51 = x_50 * y_48;

stack[0] = _51;

instr9:

/* RETURN */:

x_55 = stack[0];

x_56 = x_55;

stack ={v} {CLOBBER};

return x_56;

}

Note how, in the above, all those stack_depth values are now just

constants: we’re accessing specific stack locations at each opcode.

The “esra” pass (“Early Scalar Replacement of Aggregates”) breaks out our “stack” array into individual elements:

$ less /tmp/libgccjit-1Hywc0/fake.c.024t.esra

;; Function factorial (factorial, funcdef_no=0, decl_uid=53, symbol_order=0)

Created a replacement for stack offset: 0, size: 32: stack$0

Created a replacement for stack offset: 32, size: 32: stack$1

Created a replacement for stack offset: 64, size: 32: stack$2

Symbols to be put in SSA form

{ D.89 D.90 D.91 }

Incremental SSA update started at block: 0

Number of blocks in CFG: 5

Number of blocks to update: 4 ( 80%)

factorial (signed int arg)

{

signed int stack$2;

signed int stack$1;

signed int stack$0;

signed int stack[8];

signed int stack_depth;

signed int x;

signed int y;

<unnamed type> _20;

signed int _21;

signed int _38;

signed int _44;

signed int _51;

initial:

stack$0_45 = arg_5(D);

x_9 = stack$0_45;

stack$0_39 = x_9;

stack$1_32 = x_9;

stack$2_30 = 2;

y_17 = stack$2_30;

x_19 = stack$1_32;

_20 = x_19 < y_17;

_21 = (signed int) _20;

stack$1_28 = _21;

x_25 = stack$1_28;

if (x_25 != 0)

goto <bb 4> (instr9);

else

goto <bb 3> (instr4);

instr4:

/* DUP */:

x_27 = stack$0_39;

stack$0_22 = x_27;

stack$1_14 = x_27;

stack$2_12 = 1;

y_35 = stack$2_12;

x_37 = stack$1_14;

_38 = x_37 - y_35;

stack$1_10 = _38;

x_42 = stack$1_10;

_44 = factorial (x_42);

stack$1_6 = _44;

y_48 = stack$1_6;

x_50 = stack$0_22;

_51 = x_50 * y_48;

stack$0_1 = _51;

# stack$0_52 = PHI <stack$0_39(2), stack$0_1(3)>

instr9:

/* RETURN */:

x_55 = stack$0_52;

x_56 = x_55;

stack ={v} {CLOBBER};

return x_56;

}

Hence at this point, all those pushes and pops of the stack are now simply assignments to specific temporary variables.

After some copy propagation, the stack manipulation has been completely optimized away:

$ less /tmp/libgccjit-1Hywc0/fake.c.026t.copyprop1

;; Function factorial (factorial, funcdef_no=0, decl_uid=53, symbol_order=0)

factorial (signed int arg)

{

signed int stack$2;

signed int stack$1;

signed int stack$0;

signed int stack[8];

signed int stack_depth;

signed int x;

signed int y;

<unnamed type> _20;

signed int _21;

signed int _38;

signed int _44;

signed int _51;

initial:

stack$0_39 = arg_5(D);

_20 = arg_5(D) <= 1;

_21 = (signed int) _20;

if (_21 != 0)

goto <bb 4> (instr9);

else

goto <bb 3> (instr4);

instr4:

/* DUP */:

_38 = arg_5(D) + -1;

_44 = factorial (_38);

_51 = arg_5(D) * _44;

stack$0_1 = _51;

# stack$0_52 = PHI <arg_5(D)(2), _51(3)>

instr9:

/* RETURN */:

stack ={v} {CLOBBER};

return stack$0_52;

}

Later on, another pass finally eliminated stack_depth local and the

unused parts of the stack` array altogether:

$ less /tmp/libgccjit-1Hywc0/fake.c.036t.release_ssa

;; Function factorial (factorial, funcdef_no=0, decl_uid=53, symbol_order=0)

Released 44 names, 314.29%, removed 44 holes

factorial (signed int arg)

{

signed int stack$0;

signed int mult_acc_1;

<unnamed type> _5;

signed int _6;

signed int _7;

signed int mul_tmp_10;

signed int mult_acc_11;

signed int mult_acc_13;

# arg_9 = PHI <arg_8(D)(0)>

# mult_acc_13 = PHI <1(0)>

initial:

<bb 5>:

# arg_4 = PHI <arg_9(2), _7(3)>

# mult_acc_1 = PHI <mult_acc_13(2), mult_acc_11(3)>

_5 = arg_4 <= 1;

_6 = (signed int) _5;

if (_6 != 0)

goto <bb 4> (instr9);

else

goto <bb 3> (instr4);

instr4:

/* DUP */:

_7 = arg_4 + -1;

mult_acc_11 = mult_acc_1 * arg_4;

goto <bb 5>;

# stack$0_12 = PHI <arg_4(5)>

instr9:

/* RETURN */:

mul_tmp_10 = mult_acc_1 * stack$0_12;

return mul_tmp_10;

}

Elimination of tail recursion#

Another significant optimization is the detection that the call to

factorial is tail recursion, which can be eliminated in favor of

an iteration:

$ less /tmp/libgccjit-1Hywc0/fake.c.030t.tailr1

;; Function factorial (factorial, funcdef_no=0, decl_uid=53, symbol_order=0)

Symbols to be put in SSA form

{ D.88 }

Incremental SSA update started at block: 0

Number of blocks in CFG: 5

Number of blocks to update: 4 ( 80%)

factorial (signed int arg)

{

signed int stack$2;

signed int stack$1;

signed int stack$0;

signed int stack[8];

signed int stack_depth;

signed int x;

signed int y;

signed int mult_acc_1;

<unnamed type> _20;

signed int _21;

signed int _38;

signed int mul_tmp_44;

signed int mult_acc_51;

# arg_5 = PHI <arg_39(D)(0), _38(3)>

# mult_acc_1 = PHI <1(0), mult_acc_51(3)>

initial:

_20 = arg_5 <= 1;

_21 = (signed int) _20;

if (_21 != 0)

goto <bb 4> (instr9);

else

goto <bb 3> (instr4);

instr4:

/* DUP */:

_38 = arg_5 + -1;

mult_acc_51 = mult_acc_1 * arg_5;

goto <bb 2> (initial);

# stack$0_52 = PHI <arg_5(2)>

instr9:

/* RETURN */:

stack ={v} {CLOBBER};

mul_tmp_44 = mult_acc_1 * stack$0_52;

return mul_tmp_44;

}